Using Loadcatvtonpipeline made managing my data so much easier. Before, I struggled with slow processing and constant errors, but now everything runs smoothly and quickly. It saved me time and stress, and I can focus on more important tasks without worrying about my data.

Loadcatvtonpipeline is a process that helps manage data by loading, organizing, checking, and moving it smoothly through systems. It makes handling large amounts of data faster, more accurate, and easier for businesses.

Learn the secrets behind Loadcatvtonpipeline and how it’s helping businesses save time, boost efficiency, and stay ahead in today’s data-driven world.

Introduction to Loadcatvtonpipeline – Learn the basics!

Loadcatvtonpipeline is a system that helps manage data by loading, organizing, checking, and transferring it smoothly between systems. It handles large amounts of data quickly and reduces errors. This makes it useful for industries like e-commerce, healthcare, and logistics.

In today’s fast-paced world, accurate and fast data processing is essential for businesses. Loadcatvtonpipeline ensures data flows smoothly, improving efficiency and saving time. It helps companies stay competitive and make smarter decisions.

Loadcatvtonpipeline is a robust system designed to manage complex data flows efficiently. It automates data integration, categorization, and transfer, ensuring smooth operations. Because loading, categorizing, validating, and transferring are separate steps, an error can be traced to the step where it occurred, which makes it quicker to fix.

Why Loadcatvtonpipeline Matters in 2025 and Beyond – Discover its impact!

- Supports Big Data and AI: Handles large datasets efficiently, essential for leveraging AI and real-time analytics.

- Ensures Seamless Data Flow: Enables smooth transfer, validation, and organization of data across systems.

- Meets Modern Demands: Helps businesses manage increasing data complexity in industries like healthcare, finance, and e-commerce.

- Enhances Decision-Making: Provides accurate and reliable data for smarter, faster business decisions.

- Prepares for the Future: Scales operations to adapt to advancements in technology and growing data needs.

- Boosts Efficiency: Saves time and reduces errors, improving overall productivity in data-driven workflows.

- Strengthens Security: Incorporates encryption and validation to safeguard sensitive information.

How Loadcatvtonpipeline Enhances Data Management – Boost efficiency!

Loadcatvtonpipeline improves data management by making it faster and more organized. It loads data efficiently from different sources without delays. Categorizing the data makes it easy to access, and validation checks catch errors and maintain accuracy.

It also enables seamless data transfer between systems, keeping information secure and intact. Loadcatvtonpipeline automates tasks and scales to handle larger data volumes as businesses grow. With real-time processing, it connects systems smoothly, improving workflows and efficiency.
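"Secure and intact" can be made concrete with an integrity check. One common technique is to attach a keyed hash (HMAC) to the data before sending it and to recompute it on arrival. The minimal Python sketch below uses only the standard `hmac`, `hashlib`, and `json` modules; it is an illustration, not the Loadcatvtonpipeline implementation, and the function names and shared key are hypothetical. Note that an HMAC detects tampering but does not encrypt anything; encryption in transit would be handled separately (for example by TLS).

```python
import hashlib
import hmac
import json

# Hypothetical shared secret; in practice it would come from a secrets manager.
SECRET_KEY = b"example-shared-key"


def sign_payload(records: list[dict]) -> tuple[bytes, str]:
    """Serialize records deterministically and compute an HMAC-SHA256 tag."""
    body = json.dumps(records, sort_keys=True).encode("utf-8")
    tag = hmac.new(SECRET_KEY, body, hashlib.sha256).hexdigest()
    return body, tag


def verify_payload(body: bytes, tag: str) -> list[dict]:
    """Recompute the tag on arrival and reject the payload if it differs."""
    expected = hmac.new(SECRET_KEY, body, hashlib.sha256).hexdigest()
    # compare_digest compares in constant time to avoid leaking timing information
    if not hmac.compare_digest(expected, tag):
        raise ValueError("payload was modified in transit")
    return json.loads(body)


body, tag = sign_payload([{"order_id": 1, "total": 19.99}])
print(verify_payload(body, tag))  # [{'order_id': 1, 'total': 19.99}]
```

If any byte of `body` changes between sender and receiver, `verify_payload` raises instead of passing corrupted data downstream.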

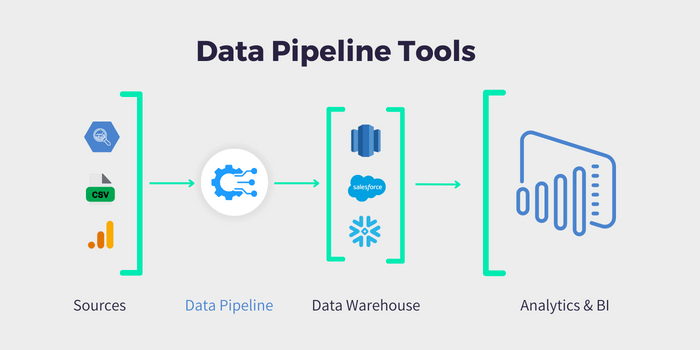

Core Components of Loadcatvtonpipeline – Understand the structure!

The core components of Loadcatvtonpipeline work together to ensure smooth and efficient data management. Below are its key elements:

- Data Loading: Efficiently imports raw data from various sources like databases, APIs, or file systems. This ensures a smooth start to the data processing pipeline without bottlenecks.

- Data Categorization: Organizes and structures data for easy access and faster processing. Categorization helps in tagging and grouping information based on predefined criteria.

- Data Validation: Ensures the accuracy and reliability of data by identifying and correcting errors. Validation includes checks for consistency, format, and integrity.

- Data Transfer and Integration: Moves validated data securely to its destination. This step ensures seamless connectivity between systems, safeguarding data during transit.
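The four components above can be sketched as four small functions run in the same order the article describes: load, categorize, validate, transfer. This is a minimal Python illustration under stated assumptions, not the Loadcatvtonpipeline implementation: the CSV source, the `category` and `price` columns, the validation rules, and the dictionary used as a destination are all hypothetical stand-ins for real databases, APIs, and warehouses.

```python
import csv
import io
from typing import Optional

# Hypothetical raw input standing in for a database, API, or file source.
RAW_CSV = """sku,category,price
A100,books,12.50
B200,toys,-3.00
C300,books,not-a-number
D400,garden,7.25
"""


def load(source: str) -> list[dict]:
    """Data Loading: read raw rows from a CSV string."""
    return list(csv.DictReader(io.StringIO(source)))


def categorize(rows: list[dict]) -> dict[str, list[dict]]:
    """Data Categorization: group rows by their 'category' column."""
    groups: dict[str, list[dict]] = {}
    for row in rows:
        groups.setdefault(row["category"], []).append(row)
    return groups


def validate(row: dict) -> Optional[str]:
    """Data Validation: return an error message, or None if the row is valid."""
    try:
        price = float(row["price"])
    except ValueError:
        return "price is not a number"
    if price < 0:
        return "price is negative"
    return None


def transfer(category: str, rows: list[dict], sink: dict) -> None:
    """Data Transfer: write validated rows to the destination (a dict here)."""
    sink.setdefault(category, []).extend(rows)


def run_pipeline(source: str, sink: dict) -> list[tuple[str, str]]:
    """Run all four stages; return (sku, reason) for every rejected row."""
    rejected = []
    for category, rows in categorize(load(source)).items():
        valid = []
        for row in rows:
            error = validate(row)
            if error is None:
                valid.append({**row, "price": float(row["price"])})
            else:
                rejected.append((row["sku"], error))
        if valid:
            transfer(category, valid, sink)
    return rejected


warehouse: dict[str, list[dict]] = {}
rejects = run_pipeline(RAW_CSV, warehouse)
print(sorted(warehouse))  # ['books', 'garden']
print(rejects)  # [('C300', 'price is not a number'), ('B200', 'price is negative')]
```

Returning the rejected rows together with a reason, instead of silently dropping them, is what makes errors easy to trace back to a specific record and rule.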

How Loadcatvtonpipeline Differs from Traditional Data Pipelines – Know the difference!

Loadcatvtonpipeline stands out from traditional data pipelines due to its advanced features and modern capabilities.

| Feature | Loadcatvtonpipeline | Traditional Data Pipelines |
| --- | --- | --- |
| Data Organization | Categorizes and organizes data for easy access. | Limited focus on organizing data. |
| Error Checking | Validates data to ensure it’s accurate and reliable. | Minimal error-checking processes. |
| Real-Time Processing | Handles data in real-time for faster results. | Mostly relies on slower batch processing. |
| Scalability | Easily manages large and growing data needs. | Struggles with handling big data loads. |
| Automation | Automates repetitive tasks to save time. | Requires more manual effort to operate. |
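The real-time versus batch row is easiest to see in code. In this minimal Python sketch the batch version waits for every event before producing anything, while the streaming version (a generator) emits each result as soon as its event arrives. The doubling step and the `sensor_feed` source are hypothetical placeholders for real processing and a real event source such as a Kafka topic.

```python
from typing import Iterable, Iterator


def sensor_feed() -> Iterator[int]:
    """Hypothetical event source; a real one might consume a message queue."""
    for reading in [3, 5, 7]:
        print(f"received {reading}")
        yield reading


def batch_process(events: Iterable[int]) -> list[int]:
    """Batch style: collect every event first, then process them together."""
    collected = list(events)  # no output until the whole batch has arrived
    return [e * 2 for e in collected]


def stream_process(events: Iterable[int]) -> Iterator[int]:
    """Streaming style: yield each result as soon as its event arrives."""
    for e in events:
        yield e * 2


print(batch_process(sensor_feed()))
# received 3, received 5, received 7, then [6, 10, 14]

for result in stream_process(sensor_feed()):
    print(f"processed {result}")
# received 3, processed 6, received 5, processed 10, received 7, processed 14
```

Both versions compute the same results; the difference is latency, since the streaming version delivers the first result before the last event has even arrived.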

What Tools Are Used for Loadcatvtonpipeline Implementation?

ETL Tools

Purpose: Extract, clean, and load data into systems for further use.

Examples: Apache NiFi, Talend, and Informatica are commonly used ETL tools.

Data Integration Tools

Purpose: Help transfer data smoothly between different systems and applications.

Examples: Popular tools include Apache Kafka, MuleSoft, and Boomi (formerly Dell Boomi).

Validation Tools

Purpose: Check data for errors to ensure it’s accurate and reliable.

Examples: Tools like Great Expectations and DataCleaner are often used.

Cloud Platforms

Purpose: Offer scalable and flexible environments for processing large amounts of data.

Examples: AWS Data Pipeline, Google Cloud Dataflow, and Azure Data Factory are popular options.

Automation Tools

Purpose: Automate tasks and make workflows faster and more efficient.

Examples: Apache Airflow and Prefect are widely used for automation.
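Airflow and Prefect both model a workflow as tasks with dependencies and run each task only after the tasks it depends on have finished. The sketch below is not their API; it uses Python's standard-library `graphlib` module (Python 3.9+) to show the same dependency-ordering idea. The task names and the `run` placeholder are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical task graph: each key maps a task to the tasks it depends on.
pipeline_tasks = {
    "load": set(),
    "categorize": {"load"},
    "validate": {"categorize"},
    "transfer": {"validate"},
    "report": {"validate"},
}


def run(task_name: str) -> None:
    """Placeholder for real work, such as triggering an ETL job or an API call."""
    print(f"running {task_name}")


# static_order() yields every task after all of its dependencies.
order = list(TopologicalSorter(pipeline_tasks).static_order())
for task in order:
    run(task)
```

Here `load`, `categorize`, and `validate` always run first, in that order; `transfer` and `report` both depend only on `validate`, so they may run in either order, or in parallel in a real orchestrator.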

Getting Started with Loadcatvtonpipeline – Start now!

- Understand the Purpose: Define the goals of your pipeline and the type of data you will manage.

- Identify Data Sources: Pinpoint where your data is coming from, such as databases, APIs, or file systems.

- Plan Data Flow: Map out how data will move through the pipeline, including loading, categorizing, validating, and transferring.

- Select the Right Tools: Use tools like ETL platforms, validation software, and integration systems tailored to your needs.

- Set Up and Configure: Configure the tools to handle data efficiently, ensuring smooth operations and minimal errors.

- Test and Optimize: Run tests to identify bottlenecks and optimize the pipeline for better performance.
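For the "Test and Optimize" step, a simple first measurement is how long each stage takes. This is a minimal Python sketch, assuming each stage is a function that takes a list and returns a list; the stage functions and the simulated delay are hypothetical.

```python
import time
from typing import Callable

Stage = Callable[[list], list]


def timed_stages(stages: list[tuple[str, Stage]], data: list) -> tuple[list, dict[str, float]]:
    """Run each stage in order and record the wall-clock seconds it took."""
    timings: dict[str, float] = {}
    for name, stage in stages:
        start = time.perf_counter()
        data = stage(data)
        timings[name] = time.perf_counter() - start
    return data, timings


# Hypothetical stages; the sleep simulates a slow validation step.
def load_stage(rows: list) -> list:
    return list(rows)


def validate_stage(rows: list) -> list:
    time.sleep(0.05)
    return [r for r in rows if r >= 0]


def transfer_stage(rows: list) -> list:
    return rows


result, timings = timed_stages(
    [("load", load_stage), ("validate", validate_stage), ("transfer", transfer_stage)],
    [4, -1, 9],
)
slowest = max(timings, key=timings.get)
print(result)   # [4, 9]
print(slowest)  # validate
```

Once the slowest stage is known, it is the natural first candidate for optimization.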

What Challenges Are Commonly Faced When Implementing Loadcatvtonpipeline?

- Complex Setup: Designing and configuring a robust pipeline requires expertise and careful planning.

- Data Diversity: Managing multiple formats and sources can be challenging and may require custom solutions.

- High Costs: Advanced tools, infrastructure, and skilled personnel can lead to significant expenses.

- Latency Issues: Ensuring real-time processing without delays demands continuous optimization.

- Scalability Concerns: Adapting to growing data volumes can strain resources if not planned properly.

- Security Risks: Protecting data during transfer and integration is critical to avoid breaches.

Overcoming these challenges involves using the right tools, planning effectively, and regularly testing the pipeline for improvements.
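For the data diversity challenge, one common approach is to write a small adapter per source format that maps each input into one shared record shape, so that later stages only deal with a single format. The Python sketch below illustrates this with one CSV source and one JSON source; the field names, sample data, and adapter names are hypothetical.

```python
import csv
import io
import json

# Hypothetical inputs: the same kind of record arriving in two formats
# with different field names and layouts.
CSV_SOURCE = "customer_id,email\n17,ANA@EXAMPLE.COM\n"
JSON_SOURCE = '[{"id": 42, "contact": {"email": "bo@example.com"}}]'


def from_csv(text: str) -> list[dict]:
    """Map CSV rows to the shared shape {customer_id: int, email: str}."""
    return [
        {"customer_id": int(row["customer_id"]), "email": row["email"].strip().lower()}
        for row in csv.DictReader(io.StringIO(text))
    ]


def from_json(text: str) -> list[dict]:
    """Map nested JSON objects to the same shared shape."""
    return [
        {"customer_id": int(item["id"]), "email": item["contact"]["email"].strip().lower()}
        for item in json.loads(text)
    ]


# One adapter per source format; adding a format means adding one entry.
ADAPTERS = {"csv": from_csv, "json": from_json}


def normalize(sources: list[tuple[str, str]]) -> list[dict]:
    """Run each (format, text) pair through its adapter and merge the results."""
    records = []
    for fmt, text in sources:
        records.extend(ADAPTERS[fmt](text))
    return records


print(normalize([("csv", CSV_SOURCE), ("json", JSON_SOURCE)]))
# [{'customer_id': 17, 'email': 'ana@example.com'}, {'customer_id': 42, 'email': 'bo@example.com'}]
```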

How Loadcatvtonpipeline Ensures Data Accuracy – Ensure reliability!

Loadcatvtonpipeline ensures data accuracy by performing validation checks like syntax, format, and range verification. These checks help detect and fix errors early, ensuring the data is reliable. It also uses consistency rules to maintain uniformity across all stages of data processing.

By automating tasks such as categorization, Loadcatvtonpipeline reduces manual errors and speeds up workflows. Additionally, it includes error detection systems and regular monitoring to spot and resolve issues quickly, ensuring the data remains accurate and trustworthy.
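The four kinds of checks named above (syntax, format, range, and consistency) can be illustrated on a single record. This minimal Python sketch assumes a hypothetical user record with `email`, `age`, `signup`, and `last_login` fields; the email pattern is deliberately simple and the 0 to 120 age range is an assumption, not a rule taken from any specific tool.

```python
import re
from datetime import date

# Deliberately simple format rule: something@something.something, no spaces.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def check_record(rec: dict) -> list[str]:
    """Return every problem found in one record; an empty list means valid."""
    errors = []
    # Syntax: dates must parse as ISO 8601 calendar dates (YYYY-MM-DD).
    try:
        signup = date.fromisoformat(rec["signup"])
        last_login = date.fromisoformat(rec["last_login"])
    except ValueError:
        errors.append("date is not in YYYY-MM-DD form")
        signup = last_login = None
    # Format: the email must look like an address.
    if not EMAIL_PATTERN.match(rec["email"]):
        errors.append("email has an invalid format")
    # Range: the age must be plausible.
    if not 0 <= rec["age"] <= 120:
        errors.append("age is outside 0-120")
    # Consistency: a user cannot log in before signing up.
    if signup is not None and last_login is not None and last_login < signup:
        errors.append("last_login is earlier than signup")
    return errors


good = {"email": "ana@example.com", "age": 34, "signup": "2024-01-05", "last_login": "2024-03-01"}
bad = {"email": "ana@", "age": 240, "signup": "2024-03-01", "last_login": "2024-01-05"}
print(check_record(good))  # []
print(check_record(bad))
# ['email has an invalid format', 'age is outside 0-120', 'last_login is earlier than signup']
```

Collecting all errors instead of stopping at the first one lets a single pass report everything wrong with a record.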

Industries That Benefit the Most from Loadcatvtonpipeline – Find your fit!

- E-commerce: Streamlines inventory management, order processing, and customer data integration for a seamless shopping experience.

- Healthcare: Manages patient records, lab results, and compliance with privacy regulations, ensuring accurate and secure data handling.

- Finance: Processes transactions, conducts risk analysis, and detects fraud efficiently to maintain trust and security.

- Logistics: Optimizes supply chains, tracks shipments, and improves delivery efficiency through better data management.

- Technology: Handles massive data flows for applications like AI, machine learning, and cloud computing, enhancing innovation and scalability.

These industries rely on Loadcatvtonpipeline to improve efficiency, accuracy, and decision-making.

Is Loadcatvtonpipeline Suitable for Small Businesses? – Check suitability!

Yes, Loadcatvtonpipeline works well for small businesses, especially those managing growing volumes of data. It helps organize, validate, and transfer data efficiently, reducing manual work and saving time. Scalable tools make it affordable and adaptable to smaller operations.

By automating tasks, Loadcatvtonpipeline allows small businesses to focus on their goals without worrying about data errors. This system improves accuracy and speeds up processes, giving small companies a chance to stay competitive in their industry.

Future Trends in Loadcatvtonpipeline – Stay ahead!

- Increased Automation: Future pipelines will rely more on AI and machine learning to automate tasks like data validation, categorization, and error correction, making processes faster and more accurate.

- Real-Time Processing: The demand for real-time insights will push Loadcatvtonpipeline systems to adopt faster and more efficient processing capabilities.

- Cloud Integration: Cloud-based pipelines will become the norm, offering scalability, flexibility, and cost savings for businesses of all sizes.

- Enhanced Security: Stronger encryption and compliance measures will be implemented to safeguard data in transit and storage, ensuring privacy and trust.

- IoT and Big Data Support: With the growth of IoT and big data, Loadcatvtonpipeline systems will evolve to handle massive, diverse, and continuous data streams effectively.

These trends will shape the future of Loadcatvtonpipeline, making it indispensable for businesses aiming to stay ahead in data management.

Frequently Asked Questions:

Why is Loadcatvtonpipeline important?

It ensures smooth data management, reduces errors, and improves efficiency, making it essential for industries that handle large amounts of data.

Can Loadcatvtonpipeline handle unstructured data?

Yes, it can manage unstructured data by using advanced categorization and validation tools to organize and process it effectively.
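As a deliberately simple illustration of giving structure to unstructured text, the Python sketch below tags free-text messages with categories using keyword rules. Production systems often use trained classifiers instead; the category names and keywords here are hypothetical.

```python
import re

# Hypothetical rules: a message gets a category if it contains any keyword.
CATEGORY_KEYWORDS = {
    "billing": {"invoice", "refund", "charge", "payment"},
    "shipping": {"delivery", "shipment", "tracking", "courier"},
    "account": {"password", "login", "username"},
}


def categorize_text(text: str) -> list[str]:
    """Tag free text with every category whose keywords it contains."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    tags = [name for name, keywords in CATEGORY_KEYWORDS.items() if words & keywords]
    return tags or ["uncategorized"]


print(categorize_text("My refund hasn't arrived and tracking shows nothing"))
# ['billing', 'shipping']
print(categorize_text("Great product!"))  # ['uncategorized']
```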

What role does AI play in Loadcatvtonpipeline?

AI enhances automation, improves data categorization, and detects patterns or anomalies in real time for better accuracy.
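AI-based anomaly detection is usually measured against a simple statistical baseline. The Python sketch below is that baseline, not a machine-learning model: it flags values whose z-score (distance from the mean in standard deviations) exceeds a threshold. The threshold of 2.0 and the sample transaction amounts are assumptions for illustration.

```python
from statistics import mean, pstdev


def flag_anomalies(values: list[float], threshold: float = 2.0) -> list[float]:
    """Return the values lying more than `threshold` standard deviations from the mean."""
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation
    if sigma == 0:
        return []  # all values identical: nothing stands out
    return [v for v in values if abs(v - mu) / sigma > threshold]


amounts = [20.0, 22.5, 19.0, 21.0, 23.0, 20.5, 250.0]
print(flag_anomalies(amounts))  # [250.0]
```

With only a handful of samples, a single outlier also inflates the standard deviation, which caps how large any z-score can get; that is why this sketch uses a threshold lower than the commonly quoted 3.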

Is Loadcatvtonpipeline suitable for real-time data processing?

Yes, modern Loadcatvtonpipeline systems are designed to handle real-time data streams efficiently.

What is the learning curve for implementing Loadcatvtonpipeline?

The complexity depends on the tools used, but user-friendly platforms reduce the learning curve significantly.

Conclusion:

Loadcatvtonpipeline is a smart way to handle data quickly and accurately. It helps businesses manage, organize, and transfer data with fewer errors, making decision-making easier. From small businesses to large companies, this system boosts productivity and prepares them for future challenges. It’s a must-have tool in today’s data-driven world.